Current audio language models are predominantly text-first, either extending pre-trained text LLM backbones or relying on semantic-only audio tokens, limiting general audio modeling. This paper presents a systematic empirical study of native audio foundation models that apply next-token prediction to audio at scale, jointly modeling semantic content, acoustic details, and text to support both general audio generation and cross-modal capabilities.

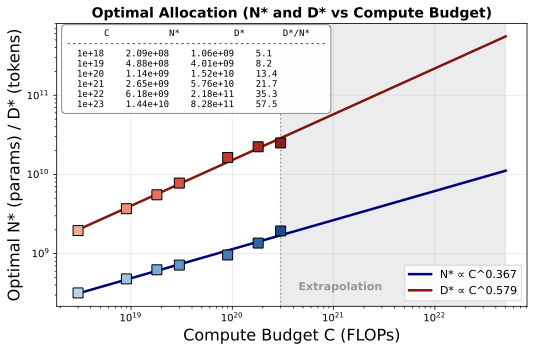

We provide comprehensive empirical insights for building such models: (1) We systematically investigate design choices (data sources, text mixture ratios, and token composition), establishing a validated training recipe. (2) We conduct the first scaling law study for discrete audio models via IsoFLOP analysis on 64 models spanning $3 \times 10^{18}$ to $3 \times 10^{20}$ FLOPs, finding that optimal data grows 1.6× faster than optimal model size. (3) We apply these lessons to train SODA (Scaling Open Discrete Audio), a suite of models from 135M to 4B parameters on 500B tokens, comparing against our scaling predictions and existing models.

SODA serves as a flexible backbone for diverse audio/text tasks. We demonstrate this by fine-tuning it for voice-preserving speech-to-speech translation with the same unified architecture.

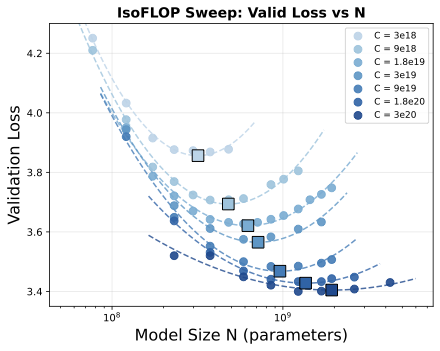

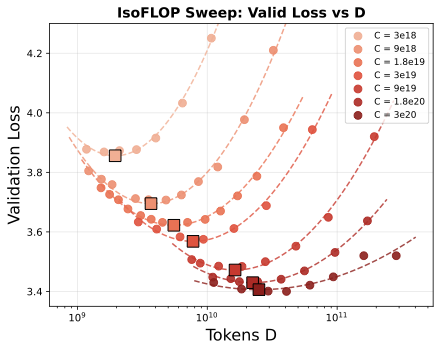

We conduct an IsoFLOP study for discrete audio models on 64 models spanning $3 \times 10^{18}$ to $3 \times 10^{20}$ FLOPs. For each compute budget, we train models of varying sizes and identify the configuration achieving the lowest validation loss.
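The per-budget selection step can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the common practice of fitting a parabola to validation loss against log model size, and the common approximation $C \approx 6ND$ for training FLOPs. The function names and the synthetic loss values are hypothetical.

```python
import numpy as np

def isoflop_optimum(param_counts, val_losses):
    """Fit loss = a*x^2 + b*x + c with x = log10(N); return (N at minimum, fitted loss there)."""
    log_n = np.log10(np.asarray(param_counts, dtype=float))
    losses = np.asarray(val_losses, dtype=float)
    a, b, c = np.polyfit(log_n, losses, deg=2)
    if a <= 0:
        raise ValueError("fitted curve is not convex, so it has no minimum")
    log_n_opt = -b / (2 * a)          # vertex of the parabola
    loss_opt = c - b**2 / (4 * a)     # fitted loss at the vertex
    return 10 ** log_n_opt, loss_opt

def tokens_for_budget(flops, n_params):
    """Training tokens implied by a FLOP budget, ASSUMING C ≈ 6 * N * D."""
    return flops / (6.0 * n_params)

# Synthetic (made-up) losses for one compute budget, shaped like a parabola in log10(N).
sizes = [1e8, 2e8, 4e8, 8e8, 1.6e9]
synthetic_losses = [2.0 + 0.1 * (np.log10(n) - 8.5) ** 2 for n in sizes]

n_opt, loss_opt = isoflop_optimum(sizes, synthetic_losses)
d_opt = tokens_for_budget(3e18, n_opt)
```

Repeating this for every compute budget gives one (budget, optimal size, optimal tokens) triple per IsoFLOP curve, which is the input a power-law fit would need.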

The fitted scaling laws reveal $N^* \propto C^{0.367}$ and $D^* \propto C^{0.579}$: the exponent for optimal data is about 1.6× the exponent for optimal model size ($0.579 / 0.367 \approx 1.58$), unlike Chinchilla's equal scaling. This aligns with the intuition that discrete audio tokens (~100 tokens/sec) carry lower information density than text, favoring more data over larger models.
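To make the exponents concrete, the short sketch below computes how the optimal model size and data would grow across the 100× compute range studied here. It uses only the two reported exponents; the constant names and the helper function are illustrative.

```python
N_EXP = 0.367  # reported exponent for optimal model size N*
D_EXP = 0.579  # reported exponent for optimal training tokens D*

def growth_factors(compute_multiplier):
    """Return (growth in N*, growth in D*) when compute grows by the given factor."""
    return compute_multiplier ** N_EXP, compute_multiplier ** D_EXP

exponent_ratio = D_EXP / N_EXP              # how much faster data scales than size
n_growth, d_growth = growth_factors(100.0)  # 3e18 -> 3e20 FLOPs is a 100x increase
```

Under these exponents, a 100× increase in compute corresponds to roughly a 5.4× larger optimal model and roughly 14.4× more optimal training tokens.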

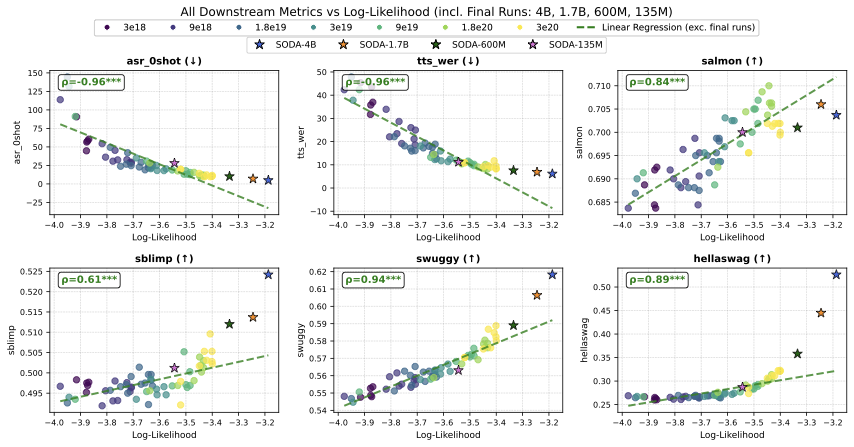

Before conducting the IsoFLOP analysis, we verified that validation loss (NLL) on held-out audio data correlates strongly with downstream task performance across all 64 models.
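One way such a check could be run is a rank correlation between per-model validation loss and a downstream score. The sketch below is an assumption about the procedure, not a description of the authors' analysis: the choice of Spearman correlation and the five data points are hypothetical, and the rank helper assumes no tied values.

```python
import numpy as np

def ranks(values):
    """Rank positions 0..n-1 for each value (assumes no ties)."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values)
    out = np.empty(len(values), dtype=float)
    out[order] = np.arange(len(values))
    return out

def spearman(x, y):
    """Spearman rank correlation: the Pearson correlation of the ranks."""
    return float(np.corrcoef(ranks(x), ranks(y))[0, 1])

# Made-up numbers for five models: lower loss should go with a higher task score.
val_nll = [2.41, 2.30, 2.22, 2.15, 2.09]
task_acc = [0.52, 0.55, 0.61, 0.60, 0.66]

rho = spearman(val_nll, task_acc)
```

A value of `rho` close to -1 would indicate that lower validation loss reliably goes with better downstream performance, which is the property that justifies using loss as the selection criterion.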

*Speech prompts and corresponding baseline outputs are taken from existing project page demos (e.g., SIMS, SpiritLM, Llama-Mimi).

1) SpiritLM and SIMS model only semantic tokens with fixed vocoders, hence cannot maintain voice characteristics in their continuations.

2) Llama-Mimi and SODA model both semantic and acoustic tokens, allowing them to maintain voice characteristics.

Source: SIMS Demo

Transcript: This is a story of a tiny talking computer

Model: SIMS-Qwen2.5-7B

Transcript: these computers are now in the pockets on the desks in the cars of many of you think of your names know where you

Transcript: because it eventually wasn't quite enough and through a number of life-changing times it's about a computer that changes the notion of who we are but how does it do any

Source: SIMS Demo

Transcript: The capital of England is

Model: SIMS-Qwen2.5-7B

Transcript: and ever has been the center of the wheel of Finance and here, the price of everything and everywhere

Transcript: Do you know what the City of London is? The city is the third largest city in the vertical index. It functions like analogy, but it doesn't use that word. The city of London was first created

Source: SpiritLM Demo (to demonstrate their expressive style transfer)

Transcript: Ha ha no I think you're thinking about Days of Thunder, which is a Tom Cruise racing movie

Model: SpiritLM-7B-Expressive

Transcript: oh God yeah this it was amazing was it was it the one where he was a monster between rocks and stars was

Transcript: yeah I got this idea for a girl who wants to know what life is like but important day for her is

Source: Llama-Mimi Demo

Transcript: 1,2,3,4,5,6

Model: Llama-Mimi-8B

Transcript: 7, 8, 9, 10, 11, 12, 9, 8 [5-second silence]

Transcript: 7, 8, 9, 10, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 1, 2, 3, 4, 5, 6

Source: Llama-Mimi Demo

Transcript: This reality begins to explain the dark power

Model: Llama-Mimi-8B

Transcript: of the evil craftiness of the two brute stocks which might be unduly impatient of the painful rustlings of their hair and the loud bellowings of their dogs the constant efforts of the buddhists in the regions of the southern seas for an indefinite time

Transcript: influence, and intensity of life. This is Celestial Energy intense changes in life, contracts with expansion uplift and turns to down. Of harness, loss of life is true a

Description: A person speaks with some background music

Transcript: The Internet combines elements of both mass and interpersonal communication

Transcript: When I'm majoring in the engineering management and structure design program, I get the opportunity to work on the Sovereign West project.

Description: Multi-speaker recording between male and female

Transcript: [S1] Welcome to this English test! I'm John. What is your name? [S2] Hi, John. I'm Sarah. [S1] Do you work or are you a student?

Transcript: I'm a student. Do you live or are you staying this year? I live in apartment number five. What does your name say after six?

Transcript: concord returned to its place amidst the tents

This utterance is taken from the LibriSpeech test set on Hugging Face.

Transcript: Joe Keaton disapproved of films, and Buster also had reservations about the medium.

This speech is generated by our AI model and it is not real

lord kingsdown funded the kingsdown church

Lord Kingsdown funded the Kingsdown church

just then leocadia came to herself and embracing the cross seemed changed into a sea of tears and the gentleman remained in utter bewilderment until his wife had repeated to him from beginning to end leocadia's whole story and he believed it through the blessed dispensation of heaven which had confirmed it by so many convincing testimonies

Just then Leo Kadya came to herself, and embracing the cross, seemed changed into a sea of tears, and the gentleman remaining in utter bewilderment, until his wife had repeated to him, from beginning to end, Leo Kadya's whole story, and he believed it, through the blessed dispensation of heaven, which had confirmed it by so many convincing testimonies.

@article{soda2026,
  author = {Manakul, Potsawee and Gan, Woody Haosheng and Bartelds, Martijn and Sun, Guangzhi and Held, William and Yang, Diyi},
  title = {Scaling Open Discrete Audio Foundation Models with Interleaved Semantic, Acoustic, and Text Tokens},
  journal = {arXiv preprint arXiv:2602.xxxxx},
  year = {2026},
}